It’s 2018 and I want to set up a Docker Swarm cluster on Google Cloud Platform for our production app, but I cannot find a definitive guide for it. Docker CE released beta support in 2017 and there is an unofficial repository by Sandeep Dinesh with easy-to-use scripts. Most of what I am including here is from the Docker Machine GCE Driver Docs and from our former Snr. Software Engineer Zak Elep, who helped us create our container story at Galleon.PH.

It almost feels as if Docker Swarm is a fading tech and everyone is scrambling towards Kubernetes. Nevertheless, down the rabbit hole we go.

The Why

We converted our local developer environments from Vagrant/VirtualBox to Docker/Docker Compose last year. It works great for onboarding new developers, as the setup is relatively simple compared to installing an entire VM. There are still some hiccups on Windows, but far fewer. It used to take a new developer up to 1 week to get their environment set up with Vagrant and VirtualBox. With Docker, it took them 1 day max.

Now we wanted to complete the story with a running version in the cloud for staging and production. We decided to go with Docker Swarm first and not Kubernetes because we felt it was the quickest path to go from Docker Compose. While we definitely could run Docker Compose in Production, it does not solve a lot of things that are expected in a real production app:

- Ingress - You have to manage your ports with Docker Compose.

- Load Balancing - High availability is rarely set up in local development. Once in production, you often find that your assumption that everything runs on a single system no longer holds.

- Redundancy, High Availability - You want to keep things online if 1 or more containers/services fail. Docker Compose just starts containers; it does not restart them.

- Persistent Storage - Usually your local setup is ephemeral; it’s OK for it to be blown away. Not in production.

- Uptime - Keeping services and containers online despite failures.

These are all things you usually do not care about on your local environment.

The How

We set about creating our Docker Swarm Cluster to run everything on Google Cloud Platform (GCP). We chose GCP because we also want to use its other services like GKE but you may also use Docker Machine Drivers for other clouds and bare metal machines if those are what you prefer.

Next, we created a Docker Stack file (similar to a Kubernetes Manifest) which is a superset of the Docker Compose syntax, basically extending it with our production requirements. If you use Version 3 of the Docker Compose syntax, you can use Docker Stack and Docker Compose syntax in the same file instead of having to maintain 2 different YAML files.
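As a rough illustration of what extending Compose with production requirements can look like, the sketch below writes a minimal Version 3 file with a `deploy` section. The service name `web`, the `nginx:alpine` image, the file name `stack-sketch.yml` and all the values are placeholders, not our actual configuration. At the time of writing, `docker-compose up` ignores the `deploy` key while `docker stack deploy` honours it, which is what lets one file serve both tools.

```shell
# Write a minimal Compose v3 file that also carries Swarm-only settings.
# Everything in here (service name, image, values) is a placeholder.
cat > stack-sketch.yml <<'EOF'
version: "3.4"
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    deploy:                 # only used by `docker stack deploy`
      replicas: 3           # redundancy: run three copies of the service
      restart_policy:
        condition: on-failure
      update_config:
        parallelism: 1      # roll out updates one task at a time
EOF

# Show the Swarm-specific part of the file.
grep -A 6 'deploy:' stack-sketch.yml
```

The same file could then be started locally with `docker-compose -f stack-sketch.yml up` or deployed to a swarm with `docker stack deploy -c stack-sketch.yml`.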

Last, we built a production version of our Docker Image because we had to pin down our dependencies. You would want to host this image in a private repository or use a service like Google Container Registry (on GCP), DockerHub, Quay.io etc.

Setting Up Our Requirements

These are the main tools we will be using to set up our Docker Swarm cluster.

Google Cloud Platform (GCP) Account - Google Cloud Platform Signup

Add a billing account that comes with $300 of free usage.

GCloud CLI - Install Instructions

You may install it using `brew` on OSX:

    brew cask install google-cloud-sdk

We will run everything using the `gcloud` CLI tool. Start by initializing the Google Cloud SDK:

    gcloud init

Google Cloud Platform Project - We need to create a project and run everything related to our swarm inside it. Each app/website and environment you use should ideally have its own GCP Project.

The project id in this example is `docker-swarm-gcp-12345` but yours should be unique.

- Create the project using the `gcloud` tool:

    gcloud projects create "docker-swarm-gcp-12345" \
      --name="Docker Swarm on GCP"

- Store your GCP Project Id and Default Zone in environment variables:

    # Store your Project Id in ENV vars
    GCP_PROJECT_ID=docker-swarm-gcp-12345
    # Store your Zone Id in ENV vars
    GCP_ZONE_ID=us-central1-a

- Enable the Google Compute Engine API for your Project Id by visiting the following URL:

https://console.developers.google.com/apis/library/compute.googleapis.com/?project=docker-swarm-gcp-12345

It will also ask you to connect a Billing Account if you haven’t already done that for your project.
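Since every later command depends on these two variables, a small guard can save a confusing failure further down. This is only a sketch: `require_var` and `enable_compute_api` are hypothetical helper names, and `gcloud services enable` is assumed to be available in your installed SDK version as a command-line alternative to the URL above.

```shell
# Hypothetical helper: fail early if a required variable is empty or unset.
require_var() {
  name="$1"
  eval "value=\${$name:-}"
  if [ -z "$value" ]; then
    echo "missing required variable: $name" >&2
    return 1
  fi
}

# Hypothetical helper: enable the Compute Engine API for the project
# from the CLI instead of the browser.
enable_compute_api() {
  require_var GCP_PROJECT_ID || return 1
  gcloud services enable compute.googleapis.com --project "${GCP_PROJECT_ID}"
}

# Same example values as above; replace them with your own.
GCP_PROJECT_ID=docker-swarm-gcp-12345
GCP_ZONE_ID=us-central1-a

require_var GCP_PROJECT_ID && require_var GCP_ZONE_ID && echo "environment looks ready"

# Then, once billing is linked to the project:
#   enable_compute_api
```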


Docker and Docker Machine - Docker Community Edition Install

The computer that you will be running the commands on in this article needs to have Docker installed.

- Check your Docker Engine and Docker Machine versions

    $ docker version
    Client:
     Version:       17.12.0-ce
     API version:   1.35
     Go version:    go1.9.2
     Git commit:    c97c6d6
     Built:         Wed Dec 27 20:03:51 2017
     OS/Arch:       darwin/amd64

    Server:
     Engine:
      Version:      17.12.0-ce
      API version:  1.35 (minimum version 1.12)
      Go version:   go1.9.2
      Git commit:   c97c6d6
      Built:        Wed Dec 27 20:12:29 2017
      OS/Arch:      linux/amd64
      Experimental: false

    $ docker-machine version
    docker-machine version 0.13.0, build 9ba6da9

Creating Our Docker Swarm Cluster

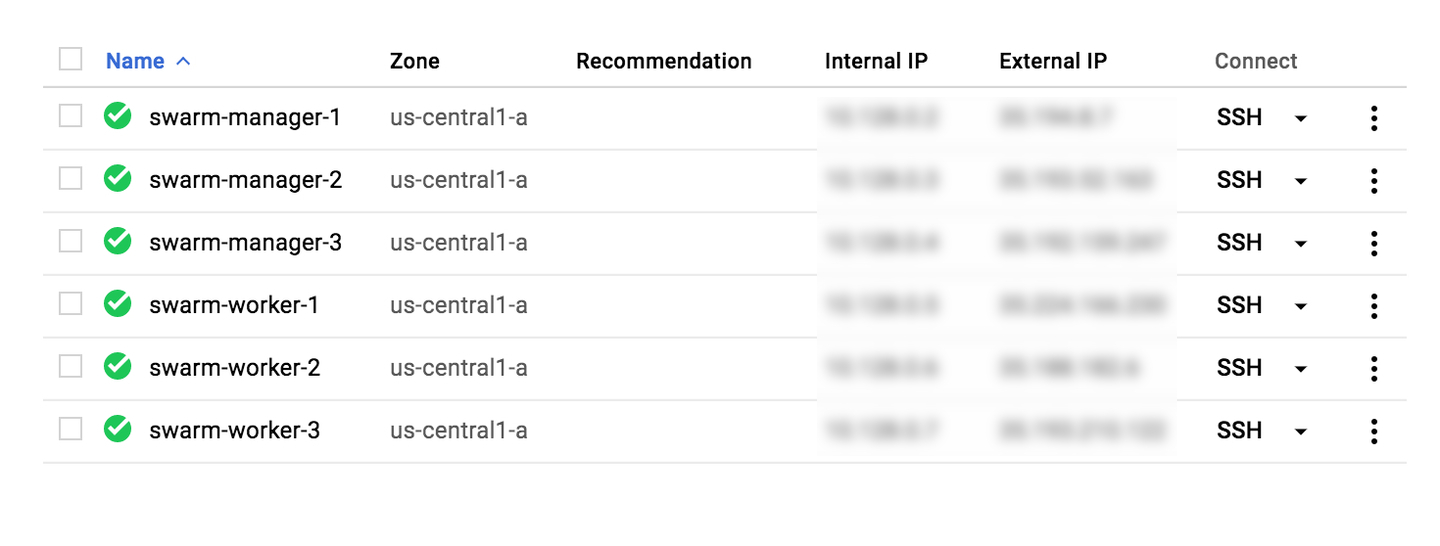

For our setup we are going to use 3 manager nodes and 3 worker nodes, but you can customize this from a single manager node up to as many worker nodes as you want. You can also add nodes later on and just add them to the cluster using the same commands below.
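One reason to pick an odd number of managers such as 3: Swarm managers agree on cluster state by majority (Raft consensus), so with N managers a majority of N/2 + 1 (rounded down) must stay reachable. The helper names below are made up for illustration; the arithmetic is the standard majority rule.

```shell
# Majority needed among N managers.
quorum() { echo $(( $1 / 2 + 1 )); }

# Managers that can fail while a majority still remains.
tolerated_failures() { echo $(( ($1 - 1) / 2 )); }

for n in 1 3 5; do
  echo "managers=$n quorum=$(quorum "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

With 3 managers the swarm keeps working if one manager goes down, while a single manager leaves no room for failure.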

- Use the `gcloud` tool to create Application Default Credentials, which Docker Machine requires.

    gcloud auth application-default login

- Create and provision the instances using the `docker-machine` tool:

    for m in swarm-{manager,worker}-{1,2,3}; do
      docker-machine create \
        --driver google \
        --google-project ${GCP_PROJECT_ID} \
        --google-zone ${GCP_ZONE_ID} \
        --google-machine-type g1-small \
        --google-tags docker \
        $m
    done

We will be using the `g1-small` Machine Type which comes with `1 CPU` and `1.7GB RAM`.

- Once your nodes are online you can also view and access them on the Google Cloud Platform console.
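If you want a different cluster size, it can help to print the node names before creating anything. The sketch below is one way to do that without relying on bash brace expansion; `node_names` is a hypothetical helper, and the naming scheme simply mirrors the one used in the loop above.

```shell
# Hypothetical helper: print node names for a given number of managers and workers.
node_names() {
  managers="$1"
  workers="$2"
  i=1
  while [ "$i" -le "$managers" ]; do
    echo "swarm-manager-$i"
    i=$((i + 1))
  done
  i=1
  while [ "$i" -le "$workers" ]; do
    echo "swarm-worker-$i"
    i=$((i + 1))
  done
}

# 3 managers and 3 workers gives the same six names as the brace expansion.
node_names 3 3

# Example for a smaller cluster (same docker-machine flags as in the loop above):
#   for m in $(node_names 1 2); do docker-machine create <flags> "$m"; done
# Afterwards, list what Docker Machine knows about:
#   docker-machine ls
```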

Connecting Our Docker Swarm Cluster

Once we have created and configured our Docker Swarm nodes, we need to connect them to each other so they know they are part of a swarm.

- Set up `swarm-manager-1` as the master node (first manager):

    FIRST_MANAGER_IP=`gcloud compute instances describe \
      --project ${GCP_PROJECT_ID} \
      --zone ${GCP_ZONE_ID} \
      --format 'value(networkInterfaces[0].networkIP)' \
      swarm-manager-1`
    docker-machine ssh swarm-manager-1 sudo docker swarm init \
      --advertise-addr ${FIRST_MANAGER_IP}

We need to fetch the internal IP of the first manager node since all nodes can only connect over the internal IPs on Google Cloud Platform.

- Add the worker nodes to our swarm:

    $ WORKER_TOKEN=`docker-machine ssh swarm-manager-1 sudo docker swarm join-token worker | grep token | awk '{ print $5 }'`
    $ for m in swarm-worker-{1,2,3}; do
        docker-machine ssh $m sudo docker swarm join \
          --token $WORKER_TOKEN \
          ${FIRST_MANAGER_IP}:2377
      done
    This node joined a swarm as a worker.
    This node joined a swarm as a worker.
    This node joined a swarm as a worker.

We use `sudo` before all our SSH commands run by docker-machine because the default `docker-user` does not have access to the Docker engine that was installed on the servers.
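The `grep token | awk '{ print $5 }'` step depends on the exact wording that `docker swarm join-token` prints. The sketch below runs the same parsing against a sample of that output, assuming the single-line `docker swarm join --token ...` format; the token and IP are made-up placeholders and the function names are hypothetical. It also shows the `-q` (`--quiet`) flag, which prints only the token and avoids the parsing altogether.

```shell
# Same parsing as the pipeline above, wrapped in a function that reads stdin.
extract_join_token() {
  grep token | awk '{ print $5 }'
}

# Sample of what `docker swarm join-token worker` prints
# (the token and IP here are made-up placeholders).
sample_output='To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-placeholder 10.128.0.2:2377
'

# Prints the fifth field of the line containing "token".
printf '%s\n' "$sample_output" | extract_join_token

# Alternative: ask Docker for the bare token with --quiet (-q).
fetch_worker_token() {
  docker-machine ssh swarm-manager-1 sudo docker swarm join-token -q worker
}

# Usage against a real cluster:
#   WORKER_TOKEN=$(fetch_worker_token)
```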

- For the two remaining manager nodes, get the token for their class and add them to the cluster:

    $ MANAGER_TOKEN=`docker-machine ssh swarm-manager-1 sudo docker swarm join-token manager | grep token | awk '{ print $5 }'`
    $ for m in swarm-manager-{2,3}; do
        docker-machine ssh $m sudo docker swarm join \
          --token $MANAGER_TOKEN \
          ${FIRST_MANAGER_IP}:2377
      done
    This node joined a swarm as a manager.
    This node joined a swarm as a manager.

Confirm the swarm cluster is running via `docker node ls` and `docker info` on the 1st manager:

    $ docker-machine ssh swarm-manager-1 sudo docker node ls
    ID                            HOSTNAME          STATUS   AVAILABILITY   MANAGER STATUS
    zls6ac0kaaxq6rvd0sd1fwbot *   swarm-manager-1   Ready    Active         Leader
    w8j7h5an21kjy7gv14nvfgryq     swarm-manager-2   Ready    Active         Reachable
    ncitu37ypxt58969t7m85r5p2     swarm-manager-3   Ready    Active         Reachable
    h2t7elv7rl61ipuj50wlri64k     swarm-worker-1    Ready    Active
    lfq6m680rfli9owdnodqmvhek     swarm-worker-2    Ready    Active
    l67z85w444d2yyqawgh9my1lq     swarm-worker-3    Ready    Active

    $ docker-machine ssh swarm-manager-1 sudo docker info
    Containers: 0
     Running: 0
     Paused: 0
     Stopped: 0
    Images: 0
    Server Version: 18.02.0-ce
    ...
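Reading the table by eye is fine for six nodes, but the same check can be scripted. The sketch below assumes `docker node ls --format` is available on your Docker version and uses it to print only the STATUS column; `count_ready` and `check_swarm_ready` are hypothetical helper names. The last line demonstrates the counting offline with the six statuses from the listing above.

```shell
# Count lines on stdin that are exactly "Ready".
count_ready() {
  grep -c '^Ready$'
}

# Hypothetical check: compare the number of Ready nodes with an expected total.
check_swarm_ready() {
  expected="$1"
  # tr strips carriage returns in case the SSH session adds them.
  ready=$(docker-machine ssh swarm-manager-1 \
    "sudo docker node ls --format '{{.Status}}'" | tr -d '\r' | count_ready)
  if [ "$ready" -eq "$expected" ]; then
    echo "all $expected nodes are Ready"
  else
    echo "only $ready of $expected nodes are Ready" >&2
    return 1
  fi
}

# Offline demonstration with the statuses from the listing above.
printf 'Ready\nReady\nReady\nReady\nReady\nReady\n' | count_ready

# Usage against a real cluster:
#   check_swarm_ready 6
```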

Running a Stack on the Docker Swarm Cluster

While new releases of Docker Engine/Swarm now support running stacks off of docker-compose.yml directly, this only works for the simple cases where there are no docker configs or docker secrets involved. You want to use Version 3 of the Docker Compose syntax so you can use both syntaxes in the same file.

See this docker-compose-sample.yml taken from the official docs as a sample application we can run in our cluster.

- Deploy the `docker-compose-sample.yml` stack to our Swarm

    curl -L -o docker-compose-sample.yml https://gist.githubusercontent.com/kzap/e8581b56a16791387e792ce7cd560201/raw/e4d0171e9d548e237a8f65055aec18b9ff33de9e/docker-compose-sample.yml
    eval $(docker-machine env swarm-manager-1)
    docker stack deploy -c docker-compose-sample.yml sample-app

We use `eval $(docker-machine env swarm-manager-1)` to set the context of our Docker client on our machine to that of the 1st Manager instance in our swarm. This is so we can run any command on it and control it from our machine. To unset this and go back to using our regular Docker on our local machine, use:

    eval $(docker-machine env -u)
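To make the `eval` trick less magical: `docker-machine env` only prints a few `export` lines, and `eval` runs them in your current shell. The sketch below replays a sample of that output so you can see the effect without a cluster; the IP address and certificate path are made-up placeholders. Note that running it changes, and then clears, the Docker variables in your current shell.

```shell
# Sample of what `docker-machine env swarm-manager-1` prints.
# The IP address and certificate path are made-up placeholders.
sample_env='export DOCKER_TLS_VERIFY="1"
export DOCKER_HOST="tcp://203.0.113.10:2376"
export DOCKER_CERT_PATH="/home/me/.docker/machine/machines/swarm-manager-1"
export DOCKER_MACHINE_NAME="swarm-manager-1"'

eval "$sample_env"
echo "docker client now targets: $DOCKER_HOST ($DOCKER_MACHINE_NAME)"

# Sample of what `docker-machine env -u` prints: it unsets the same variables.
sample_unset='unset DOCKER_TLS_VERIFY
unset DOCKER_HOST
unset DOCKER_CERT_PATH
unset DOCKER_MACHINE_NAME'

eval "$sample_unset"
echo "DOCKER_HOST after unset: ${DOCKER_HOST:-<not set>}"
```

Because these are plain environment variables, they only affect the terminal session in which you ran `eval`.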

- Check the status of our Docker Swarm Services running our Stack

    docker stack ls
    docker stack ps sample-app
    docker service ls

Access our sample-app

Google Compute Engine has most ports blocked by default, so we cannot access this app from outside our instances unless we open the ports using the GCP Firewall. We can do this with a single command:

    gcloud compute firewall-rules create docker-stack-sample-app-ports \
      --project=${GCP_PROJECT_ID} \
      --description="Allow Docker Stack Sample App Ports" \
      --direction=INGRESS \
      --priority=1000 \
      --network=default \
      --action=ALLOW \
      --rules=tcp:5000,tcp:5001,tcp:8080,tcp:30000 \
      --target-tags=docker-machine

Now we can access the services of our sample-app by using any of the IPs in our cluster. It does not matter which IP we use: the swarm acts as a mesh network and you will be routed to wherever there is a container running on that port.

We use `docker-machine ip swarm-manager-1` to get the public IP of our 1st Manager Node:

    # cURL Visualizer app to see where all the containers are
    curl -I http://`docker-machine ip swarm-manager-1`:8080
    # cURL sample frontend app and cast a vote
    curl -I http://`docker-machine ip swarm-manager-1`:5000
    # cURL sample backend app and see results of the vote
    curl -I http://`docker-machine ip swarm-manager-1`:5001
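To see the mesh routing for yourself, you can request the same port on every node and compare the answers. The sketch below is one way to do that, assuming the six node names used in this article; `http_status` and `probe_all_nodes` are hypothetical helper names.

```shell
# Hypothetical helper: print the HTTP status code returned by a URL.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# Hypothetical helper: request the same port on every node in the cluster.
probe_all_nodes() {
  port="$1"
  for m in swarm-manager-1 swarm-manager-2 swarm-manager-3 \
           swarm-worker-1 swarm-worker-2 swarm-worker-3; do
    ip=$(docker-machine ip "$m")
    echo "$m ($ip): $(http_status "http://$ip:$port")"
  done
}

# Usage, once the firewall rule is in place:
#   probe_all_nodes 8080
```

If the routing mesh is working, every node should answer on the published port, including nodes that are not running a container for that service.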

Cleaning Up The Cluster

To delete your cluster and go back to zero, just use the docker-machine rm command:

    for m in swarm-{manager,worker}-{1,2,3}; do
      docker-machine rm -y $m
    done
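The loop above removes the machines, but the firewall rule we created for the sample app belongs to the project rather than to any instance, so it stays behind. A minimal sketch for removing it follows; `run` and `cleanup_extras` are hypothetical helpers, and by default the script only prints the command (set `DRY_RUN=0` to actually execute it).

```shell
# Print commands instead of running them unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: remove what was created outside docker-machine.
cleanup_extras() {
  # Delete the firewall rule created for the sample app ports.
  run gcloud compute firewall-rules delete docker-stack-sample-app-ports \
    --project "${GCP_PROJECT_ID:-docker-swarm-gcp-12345}" --quiet
}

cleanup_extras
```

If your shell still points at the deleted manager, `eval $(docker-machine env -u)` resets the Docker client to your local engine.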

TLDR

We set up our own Docker Swarm cluster on Google Cloud Platform and can now run as many applications / Docker Stack apps as can fit. In the end, though, it was quite a lot of work, and we are not in the business of maintaining infrastructure. Having platforms like these to run distributed applications on is awesome, but maintaining them can really feel like a full-time position. We are already looking at other options for running in production that don’t have us managing the machines. (K8s perhaps?)

What’s Next?

While we deployed a stack with a bunch of services to our Docker Swarm, the next step is working out how to allow others to do this, or how to repeat these steps for different environments. How do you even share access to the Docker Swarm setup with other developers or your CI?

You can use this tool machine-share to import and export your credential certificates.

If you would like an interface to manage your different Docker and Docker Swarm environments, you can run Portainer (on Docker?) and give access to various team members. There are actually a bunch of ways you might want to share access and make deploying to your swarm easier.

The Cloud Native (buzzword) world has moved on and declared Kubernetes the standard way to run distributed containerized apps. So if you want to run a containerized app/website in the cloud, that is what you should focus on since you just have to build the manifest file and set up your own cluster on GCP, AWS or Azure with a few clicks or a single command line.

Nevertheless, there are always those like me who like to learn how to do things the hard way before doing them the easy way (hard-headed, I’ve been told). I hope this guide was of use to someone out there, and if you have questions or suggestions or just want to say Hi!, hit me up on Twitter @kzapkzap.